I’ve been a software engineer for roughly twenty years now. For most of my career I have worked on what many would consider “legacy code”: applications such as payroll calculations and some embedded applications. Nothing on the bleeding edge of technology. This has often put me solidly in the camp of those resistant to new technologies, because I’ve needed to build on an old code base with techniques and tools that have been proven to work. Having seen AI rise in power and productivity in the form of LLMs, I realized that I cannot continue in my career without getting some level of familiarity with the technology. So I’ve installed Ollama and Open WebUI and have started playing with LLMs locally.

What are my goals for using LLMs?

In the short term my goals are:

- Run an LLM locally so that I don’t have to spend a lot of money to familiarize myself with the technology

- Have an LLM help write this post so I can practice writing queries for text

Getting the tools installed

Presently the tools I am using are Ollama, Docker Desktop, and Open WebUI.

Installing Ollama Locally

I am running Ollama directly on my Windows machine so that I don’t have to deal with passing my GPUs through to a Docker container. I know it’s possible and likely not that hard, but I put a priority on limiting what I need to learn at any one time. I installed Ollama by going to the download page of their website, downloading the Windows installer, and running it.

For my current use, however, the only thing I really needed to do was get Ollama to download a model. Because my hardware is relatively limited (a 12GB RTX 3060 and a 6GB GTX 1660 Super), I decided to go with a relatively small model from the list of supported models. For general-purpose queries like writing blog posts, I chose the 8 billion parameter version of Llama 3.1. To get Ollama to download this model I ran:

ollama pull llama3.1:8b

Installing Docker Desktop

The Docker Desktop installer can be downloaded from Docker’s main site; run the installer once it’s downloaded. Since this post is not about Docker directly and is geared toward technically inclined folks, I’m going to presume that you can get it installed and move on to the next step.

Getting Open WebUI

Open WebUI is pretty easy to get going once you’ve got Docker Desktop installed. There are instructions here but, to save time, you can run the following command to start it against the instance of Ollama running on your machine. For setups where Ollama is running elsewhere, see the instructions page.

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Once the container is up and running, you can access it at http://localhost:3000.
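On the first run, the container may need a little while before the page loads. In the command above, `-p 3000:8080` publishes the container’s port 8080 on port 3000 of your machine, which is why the UI is at `http://localhost:3000`. If you’d like to script the “is it up yet?” check, here is a minimal Python sketch that uses only the standard library. `wait_for_http` and its timing defaults are hypothetical choices for illustration; they are not part of Docker or Open WebUI.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll `url` until any HTTP response comes back or `timeout_s` seconds pass."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5):
                return True  # 2xx response: the server is up
        except urllib.error.HTTPError:
            return True  # a 4xx/5xx still means something is listening and answering
        except OSError:
            pass  # refused, reset, or timed out: not ready yet (URLError is an OSError)
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


# Usage (assumes the container was started with the -p 3000:8080 mapping above):
#   if not wait_for_http("http://localhost:3000"):
#       print("No response yet; check the container with: docker logs open-webui")
```

`HTTPError` is caught before the general `OSError` case because it is a subclass of `URLError`. A server that answers with an error page is still treated as "up".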

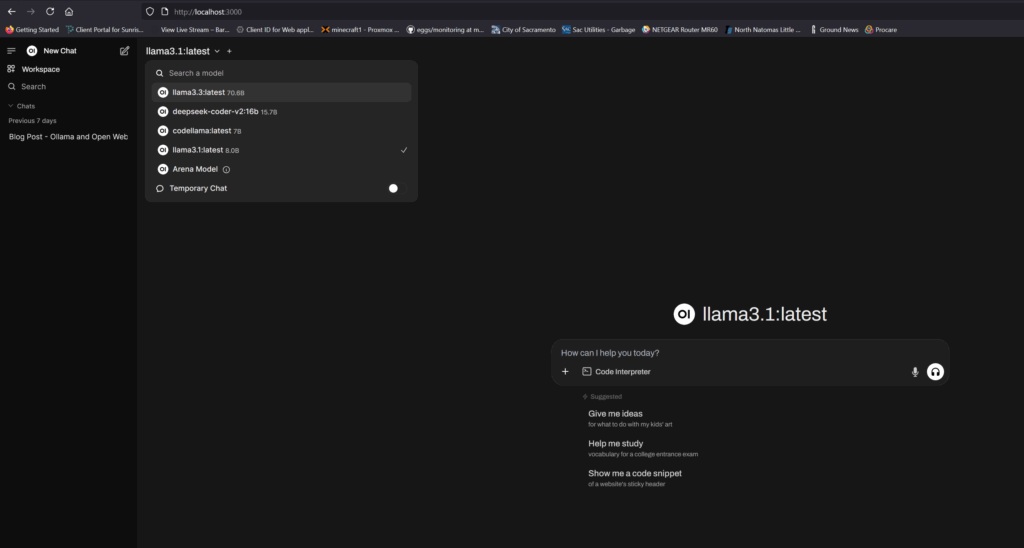

What does the Open WebUI page look like?

The main page is pretty simple. There is a drop-down at the top left for choosing the model you want to query, a text box in the center for entering your query, and a sidebar along the left that lets you save conversations. Note that the models you see here are ones I’ve already downloaded via Ollama in the hope that I might actually use them at some point.
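You can also get the list of downloaded models straight from Ollama, which is handy for confirming that `ollama pull` actually finished. Below is a minimal Python sketch that asks Ollama’s REST API for its local models. It assumes Ollama is listening on its default address, `http://localhost:11434`, and that `GET /api/tags` returns a JSON object with a `models` array whose entries carry a `name` field. It is not necessarily how Open WebUI builds its drop-down.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags: dict) -> list[str]:
    """Pull the model names (e.g. "llama3.1:8b") out of a /api/tags response, sorted."""
    return sorted(model["name"] for model in tags.get("models", []))


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models have already been downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return parse_model_names(json.load(resp))


# Usage (needs Ollama running):
#   for name in list_local_models():
#       print(name)
```

Running `ollama list` on the command line shows the same information without any code.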

Given that I am still at the beginning of my journey with LLMs, I am not going to go into any of the many settings available in this tool.

How did it work for me?

I used the 8 billion parameter llama3.1 model to try to write this post. I kicked things off with the following query, hoping it would produce an installation guide that looked somewhat like how I write my posts.

Write a blog post in the style of the posts on https://blog.taylorbuiltsolutions.com/ about installing Ollama, Docker Desktop, and open-webui on Windows so that someone can get started using LLMs
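Open WebUI is the friendly front end, but the same query can be sent to Ollama directly. That makes it easier to repeat a prompt while tweaking the wording. Here is a minimal Python sketch that assumes Ollama’s default address and its `POST /api/generate` endpoint. That endpoint takes `model`, `prompt`, and `stream` fields. With `stream` set to false, it returns a single JSON object whose `response` field holds the generated text. The helper names are illustrative.

Note that in this request the URL is just text to the model. Nothing here fetches the blog, so the model never actually reads its posts.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> dict:
    """Body for POST /api/generate; stream=False asks for one JSON reply instead of chunks."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send one prompt to Ollama and return the generated text."""
    body = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generating a long post on a consumer GPU can take a while, so allow a generous timeout
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)["response"]


# Usage (needs Ollama running and llama3.1:8b pulled):
#   print(generate("llama3.1:8b", "Summarize why someone might run an LLM locally."))
```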

The output was … ok. There were some errors in the installation guide (which I’ll discuss below), and the initial text didn’t include any summary of why someone might want to use these tools in the first place. I was able to get it to produce one with further queries, but the text didn’t sound like how I’d want to say things.

In the end, I still wrote much of the post myself. The generated content did, however, provide a decent jumping-off point. One thing I want to research is whether I can get information from live websites (like GitHub) so that the content produced by the LLM is accurate.
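One possible approach, sketched here under stated assumptions rather than as a tested recipe, is to fetch the facts yourself, paste them into the prompt, and tell the model to rely on them. The Python sketch below gets the latest release of a GitHub repository from GitHub’s public REST API (`GET /repos/{owner}/{repo}/releases/latest`). The JSON it returns includes `tag_name` and an `assets` list whose entries have `name` and `browser_download_url`. The sketch formats that as a block of facts. The function names and the prompt wording are hypothetical. Also note that unauthenticated GitHub API calls are rate limited.

```python
import json
import urllib.request


def fetch_latest_release(owner: str, repo: str) -> dict:
    """Fetch the newest release's metadata from GitHub's REST API."""
    url = f"https://api.github.com/repos/{owner}/{repo}/releases/latest"
    req = urllib.request.Request(url, headers={"Accept": "application/vnd.github+json"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def release_facts(release: dict) -> str:
    """Turn release JSON into plain-text facts: the tag plus one line per downloadable file."""
    lines = [f"Latest release tag: {release['tag_name']}"]
    for asset in release.get("assets", []):
        lines.append(f"- {asset['name']}: {asset['browser_download_url']}")
    return "\n".join(lines)


def grounded_prompt(task: str, facts: str) -> str:
    """Hypothetical prompt layout: facts first, then the request that should use them."""
    return (
        "Use only the facts below for any file names or download URLs.\n\n"
        f"FACTS:\n{facts}\n\n"
        f"TASK:\n{task}"
    )


# Usage (needs network access to api.github.com):
#   facts = release_facts(fetch_latest_release("ollama", "ollama"))
#   print(grounded_prompt("Explain how to download the Ollama installer on Windows.", facts))
```

There is no guarantee a small model like llama3.1:8b will stick to the supplied facts. It does, however, give the model the real file names and URLs to work from instead of leaving it to guess.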

Errors in the LLM generated post

The first issue I noticed is that it suggested the reader use the command line to install Ollama. This isn’t a big issue, and it’s certainly possible on Windows, though it isn’t likely the first thing most Windows users would do (developers, admittedly, might). However, it had the script section tagged as Bash and used curl (again, not a big stretch, but definitely more of a Linux default than a Windows one). I told the LLM that I was using Windows and asked it to use PowerShell, and it changed the box to use PowerShell and Invoke-WebRequest. This is ok so far.

The second, and bigger, problem is that it got the GitHub address and file to download totally wrong. The URL it recommended I download was:

https://github.com/ollama-dev/ollama/releases/download/latest/ollama.exe

The actual GitHub URL for the Ollama repository is: https://github.com/ollama/ollama/

Additionally, there is no ollama.exe on the releases page. There are zip files and tarballs for different operating systems, and there is an installer for Windows, but it is called OllamaSetup.exe. This is the point where you might say “you just didn’t frame your query correctly!”, and you’d very likely be right. I need to learn more about how to query properly and/or get the LLM to read websites and return the correct data.
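The generated URL also mixes up GitHub’s two download URL shapes. A file attached to a specific release lives at `https://github.com/{owner}/{repo}/releases/download/{tag}/{asset}`. By contrast, `https://github.com/{owner}/{repo}/releases/latest/download/{asset}` redirects to that file on the most recent full release. The generated URL uses the first shape with `latest` in the tag position, so GitHub would look for a release literally tagged `latest`, on top of the wrong owner and file name. Below is a small Python sketch of both shapes. `release_asset_url` is a hypothetical helper, not a GitHub or Ollama API.

```python
import urllib.request
from typing import Optional


def release_asset_url(owner: str, repo: str, asset: str, tag: Optional[str] = None) -> str:
    """Build a GitHub release download URL; with no tag, use the 'latest release' redirect."""
    base = f"https://github.com/{owner}/{repo}/releases"
    if tag is None:
        return f"{base}/latest/download/{asset}"
    return f"{base}/download/{tag}/{asset}"


# Usage (needs network access; urlretrieve follows GitHub's redirect to the real file):
#   url = release_asset_url("ollama", "ollama", "OllamaSetup.exe")
#   urllib.request.urlretrieve(url, "OllamaSetup.exe")
```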

Conclusion

Using LLMs is definitely going to be an interesting learning process, and I’m excited to continue with it. The generated content for this post was not the most useful, but it did give me a jumping-off point. I have more post topics rattling around in my head that I intend to practice querying with. That said, I doubt I will ever use generated posts wholesale; I’m still too much of a control curmudgeon to let an LLM speak for me.

I also intend to use some of the coding models to help build a server maintenance tool I’ve wanted to write. It will give me a chance to practice querying and to learn modern C++ (so I don’t stay stuck in the dark ages). So, more to come soon!